Complex computer systems often process large sets of data so that the results can be passed on to vendors and other partners. Many companies use multiple processors to complete this work. One of the best ways to make sure every set of data is processed correctly is parallel processing.

There are several ways to get the most out of parallel processing for your company’s performance. Here are a few of them.

1. Processing Nodes

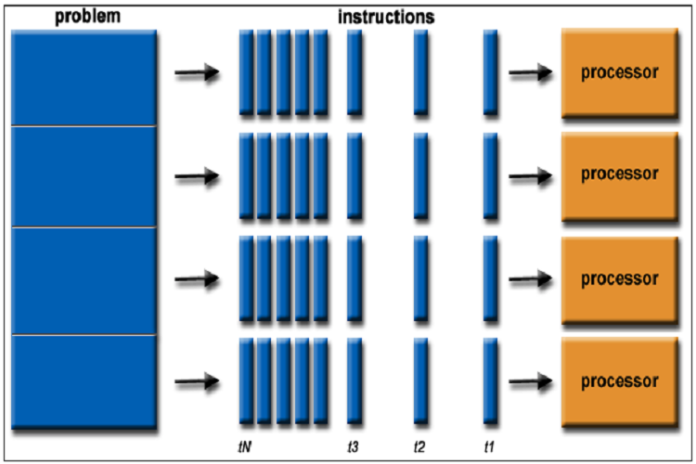

It helps to be a computer scientist when breaking down the various aspects of parallel processing, but you can learn the essentials on your own. First, it helps to have a basic understanding of what the process is. At its most basic, parallel processing uses several processors to carry out many calculations or processes simultaneously. Large problems are divided into smaller ones, which can then be solved concurrently, as described by data science software vendor TIBCO.
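The divide-and-solve idea can be sketched in a few lines of Python. This is a minimal illustration, not a production pattern: the function names (`split_into_chunks`, `sum_of_squares`, `parallel_sum_of_squares`) and the sum-of-squares workload are assumptions chosen for the example, and the only library used is the standard `concurrent.futures` module.

```python
from concurrent.futures import ProcessPoolExecutor


def split_into_chunks(data, n_chunks):
    """Divide a list into at most n_chunks contiguous, roughly equal parts."""
    size = max(1, -(-len(data) // n_chunks))  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def sum_of_squares(chunk):
    """The smaller problem that each worker solves independently."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data, workers=4, executor_cls=ProcessPoolExecutor):
    """Split the big problem, solve the parts concurrently, combine the results."""
    chunks = split_into_chunks(data, workers)
    with executor_cls(max_workers=workers) as pool:
        # Each chunk is handed to a separate worker; map() keeps the order.
        partial_sums = list(pool.map(sum_of_squares, chunks))
    return sum(partial_sums)
```

When using the default process pool from a script, call `parallel_sum_of_squares(list(range(1_000_000)))` inside an `if __name__ == "__main__":` guard so that worker processes can import the module safely. The `executor_cls` parameter is a hypothetical convenience that lets you swap in `ThreadPoolExecutor` for quick experiments.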

Dividing the work this way lets computations proceed with high performance. A larger-scale form is massively parallel processing (MPP), a paradigm in which hundreds or thousands of processing nodes work on parts of a computational task in parallel. Each node runs its own instance of an operating system. On a smaller scale, an insurance company might use a few processors in a parallel system to keep up with all the customer data it holds on-site.

The first thing to understand when dealing with this form of processing is the processing node. Nodes are the basic building blocks of a massively parallel system: simple components that each include one or more processing units.
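To make the role of the nodes more concrete, here is a small sketch of how records might be spread across them so that each node works on its own share. The hashing scheme and the names `node_for` and `partition` are assumptions made for illustration; real systems choose their own distribution strategy.

```python
import zlib
from collections import defaultdict


def node_for(record_key, n_nodes):
    """Map a record key to a node index using a stable hash (CRC32)."""
    return zlib.crc32(record_key.encode("utf-8")) % n_nodes


def partition(record_keys, n_nodes):
    """Group record keys by the node that would own them."""
    assignment = defaultdict(list)
    for key in record_keys:
        assignment[node_for(key, n_nodes)].append(key)
    return dict(assignment)
```

For example, `partition(["customer-1", "customer-2", "customer-3"], 4)` returns a dictionary that maps node indexes to the customer keys each node would handle. Because the hash is stable, the same key always lands on the same node.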

2. High-Speed Interconnect

Another way to maximize parallel processing is through a high-speed interconnect, and it starts at the node level. In a parallel system, the nodes work in parallel on parts of a single computational problem. Although they operate independently of one another, they still need to communicate with each other regularly.

For this communication, you need low-latency, high-bandwidth connections between the nodes. This is the job of the high-speed interconnect, which can be an Ethernet connection, a Fiber Distributed Data Interface (FDDI) link, or a proprietary connection method. A fast interconnect helps maximize data processing across the computer network.
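A rough way to see why both latency and bandwidth matter is the common first-order estimate that sending a message costs a fixed latency plus the message size divided by the bandwidth. The function below is a simplified model under that assumption; the name `transfer_time` and the sample figures are illustrative and do not describe any particular interconnect.

```python
def transfer_time(message_bytes, latency_s, bandwidth_bytes_per_s):
    """Estimate the seconds needed to send one message between two nodes.

    Simplified model: fixed per-message latency plus size / bandwidth.
    """
    return latency_s + message_bytes / bandwidth_bytes_per_s


# Illustrative figures only: 1 microsecond latency, 1.25 GB/s bandwidth.
small = transfer_time(64, 1e-6, 1.25e9)          # dominated by latency
large = transfer_time(1_000_000, 1e-6, 1.25e9)   # dominated by bandwidth
```

Under this model, small and frequent messages are limited mostly by latency, while large transfers are limited mostly by bandwidth, which is why nodes that communicate regularly benefit from both.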

3. Distributed Lock Manager (DLM)

A distributed lock manager (DLM) is the part of a parallel system that coordinates resource sharing. It is used, for example, in parallel architectures where external memory is shared among the nodes.

The DLM takes requests for resources from the various nodes and connects those nodes to the resources when they become available. It also makes sure that the data stays consistent. This makes the distributed lock manager a useful tool for maximizing parallel processing across a computer system.
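The request-and-grant behavior described above can be illustrated with a toy model. This is a single-process simulation with exclusive locks only, not a real distributed lock manager; the class name `ToyLockManager` and its methods are hypothetical and exist only for this sketch.

```python
from collections import defaultdict, deque


class ToyLockManager:
    """Toy, in-process stand-in for a DLM (exclusive locks only)."""

    def __init__(self):
        self.holder = {}                    # resource -> node currently holding it
        self.waiting = defaultdict(deque)   # resource -> nodes queued for it

    def request(self, node, resource):
        """Grant the resource if it is free; otherwise queue the node.

        Returns True if the lock was granted immediately.
        """
        if resource not in self.holder:
            self.holder[resource] = node
            return True
        self.waiting[resource].append(node)
        return False

    def release(self, node, resource):
        """Release the resource and hand it to the next waiting node, if any.

        Returns the node that now holds the resource, or None.
        """
        if self.holder.get(resource) != node:
            raise ValueError(f"{node} does not hold {resource}")
        queue = self.waiting[resource]
        if queue:
            self.holder[resource] = queue.popleft()
            return self.holder[resource]
        del self.holder[resource]
        return None
```

In this sketch, a second node that requests a held resource waits in a queue and receives the resource as soon as the first node releases it. Because only one node holds a given resource at a time, two nodes cannot change the same data simultaneously.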

4. Massively Parallel Processing Architectures

Massively parallel processing architectures fall into two major groups based on how the nodes share their resources. In both, the computing method runs two or more processors (CPUs), and the goal is to have them handle separate parts of a particular task. Such an architecture is a good way to maximize the processing of different data streams.

5. Shared Disk Systems

At the base level, each node in a shared disk system has one or more CPUs and its own independent random-access memory (RAM), while the disk storage is shared among the nodes. The performance of a shared disk system depends on the bandwidth of the high-speed interconnect as well as on hardware constraints. Through their data storage function, shared disk systems are a much-needed tool for maximizing data processing in a parallel structure.
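As a rough picture of this layout, the toy model below gives each node its own private memory while all nodes read from and write to one shared store. The classes `SharedDisk` and `Node` are hypothetical stand-ins invented for this sketch and leave out everything a real system needs, such as locking and networking.

```python
class SharedDisk:
    """Stand-in for storage that every node can reach."""

    def __init__(self):
        self.blocks = {}

    def read(self, block_id):
        return self.blocks.get(block_id)

    def write(self, block_id, value):
        self.blocks[block_id] = value


class Node:
    """A node with private RAM and access to the shared disk."""

    def __init__(self, name, disk):
        self.name = name
        self.disk = disk   # shared among all nodes
        self.ram = {}      # independent, private to this node

    def load(self, block_id):
        """Copy a block from the shared disk into this node's RAM."""
        self.ram[block_id] = self.disk.read(block_id)
        return self.ram[block_id]

    def store(self, block_id, value):
        """Update this node's RAM and write the block back to the shared disk."""
        self.ram[block_id] = value
        self.disk.write(block_id, value)
```

If one node stores a new value after another node has loaded the old one, the second node keeps a stale copy in its RAM until it loads the block again. Coordinating that kind of access is the sort of job a lock manager takes on.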